Cluster analysis

NOTE: This page has been revised for Winter 2024, but may undergo further edits.

1 Introduction

“Cluster analysis” is a broad group of multivariate analyses that have as their objective the organization of individual observations (objects, sites, grid points, individuals), and these analyses are built upon the concept of multivariate distances (expressed either as similarities or dissimilarities) among the objects.

The organization generally takes two forms:

- the arrangement of the objects in a lower-dimensional space than the data were originally observed on;

- the development of “natural” clusters or the classifications of the objects.

These analyses share many concepts and techniques (both numerical and practical) with other procedures such as principal components analysis, numerical taxonomy, discriminant analysis and so on, and cluster analysis is one of the few machine learning techniques that uses its traditional name in multivariate analysis as its modern Data Science/Machine Learning name.

The analyses generally begin with the construction of an n x n matrix D of the distances between objects. For example, in a two-dimensional space, the elements dij of D could be the Euclidean distances between points,

dij = [(xi1- xj1)2 + (xi1- xj1)2]½

The Euclidean distance, and related measures are easily generalized to more than two dimensions.

To begin load some packages:

library(sf)

library(ggplot2)

library(RColorBrewer)

library(lattice)

library(GGally)

library(MASS)

library(ncdf4)

library(terra)

library(tidyterra)

library(stringr)

library(gplots)

library(parallelDist)Some of these packages are familiar, but {GGally}

provides some enhanced {ggplot2} plots,

{stringr} manages the manipulation of strings,

{parallelDist} exploits multiple processors/cores to

efficiently calculate large dissimilarlty/distance matrices, and

’{gplots}` calculates heat maps.

2 A simple cluster analysis

The basic idea of cluster analysis can be illustrated using the Oregon climate-station dataset, with the goal being to identify groups of stations with similar climates, which also regionalizes the climate of the state. Begin by reading a shape file for mapping the data, and the data itself.

# read county outlines

# orotl_sf

shapefile <- "/Users/bartlein/Projects/RESS/data/shp_files/OR/orotl.shp"

orotl_sf <- st_read(shapefile)## Reading layer `orotl' from data source `/Users/bartlein/Projects/RESS/data/shp_files/OR/orotl.shp' using driver `ESRI Shapefile'

## Simple feature collection with 36 features and 1 field

## Geometry type: POLYGON

## Dimension: XY

## Bounding box: xmin: -124.5584 ymin: 41.98779 xmax: -116.4694 ymax: 46.23626

## CRS: NAAdd a coordinate reference system and plot:

# add a CRS

st_crs(orotl_sf) <- st_crs("+proj=longlat")

or_otl_sf <- st_geometry(orotl_sf)

plot(or_otl_sf)

Now read a *.csv file, and attach it.

# read stations

orstationc_path <- "/Users/bartlein/Projects/RESS/data/csv_files/"

orstationc_name <- "orstationc.csv"

orstationc_file <- paste(orstationc_path, orstationc_name, sep="")

orstationc <- read.csv(orstationc_file)

summary(orstationc)## station lat lon elev tjan tjul

## Length:92 Min. :42.05 Min. :-124.6 Min. : 2.00 Min. :-6.000 Min. :12.20

## Class :character 1st Qu.:43.60 1st Qu.:-123.2 1st Qu.: 94.25 1st Qu.:-1.225 1st Qu.:17.30

## Mode :character Median :44.46 Median :-121.7 Median : 462.00 Median : 0.750 Median :19.20

## Mean :44.32 Mean :-121.3 Mean : 556.99 Mean : 1.417 Mean :19.01

## 3rd Qu.:45.28 3rd Qu.:-119.6 3rd Qu.: 863.75 3rd Qu.: 4.150 3rd Qu.:20.20

## Max. :46.15 Max. :-117.0 Max. :1974.00 Max. : 8.400 Max. :26.40

## tann pjan pjul pann idnum Name

## Min. : 3.200 Min. : 14.00 Min. : 4.00 Min. : 198.0 Min. :350197 Length:92

## 1st Qu.: 8.700 1st Qu.: 39.75 1st Qu.: 7.00 1st Qu.: 299.0 1st Qu.:352308 Class :character

## Median :10.400 Median : 87.50 Median : 9.00 Median : 531.0 Median :354564 Mode :character

## Mean : 9.885 Mean :141.67 Mean :10.84 Mean : 868.6 Mean :354455

## 3rd Qu.:11.200 3rd Qu.:239.25 3rd Qu.:12.25 3rd Qu.:1355.8 3rd Qu.:356663

## Max. :12.600 Max. :414.00 Max. :34.00 Max. :2517.0 Max. :359316## The following objects are masked _by_ .GlobalEnv:

##

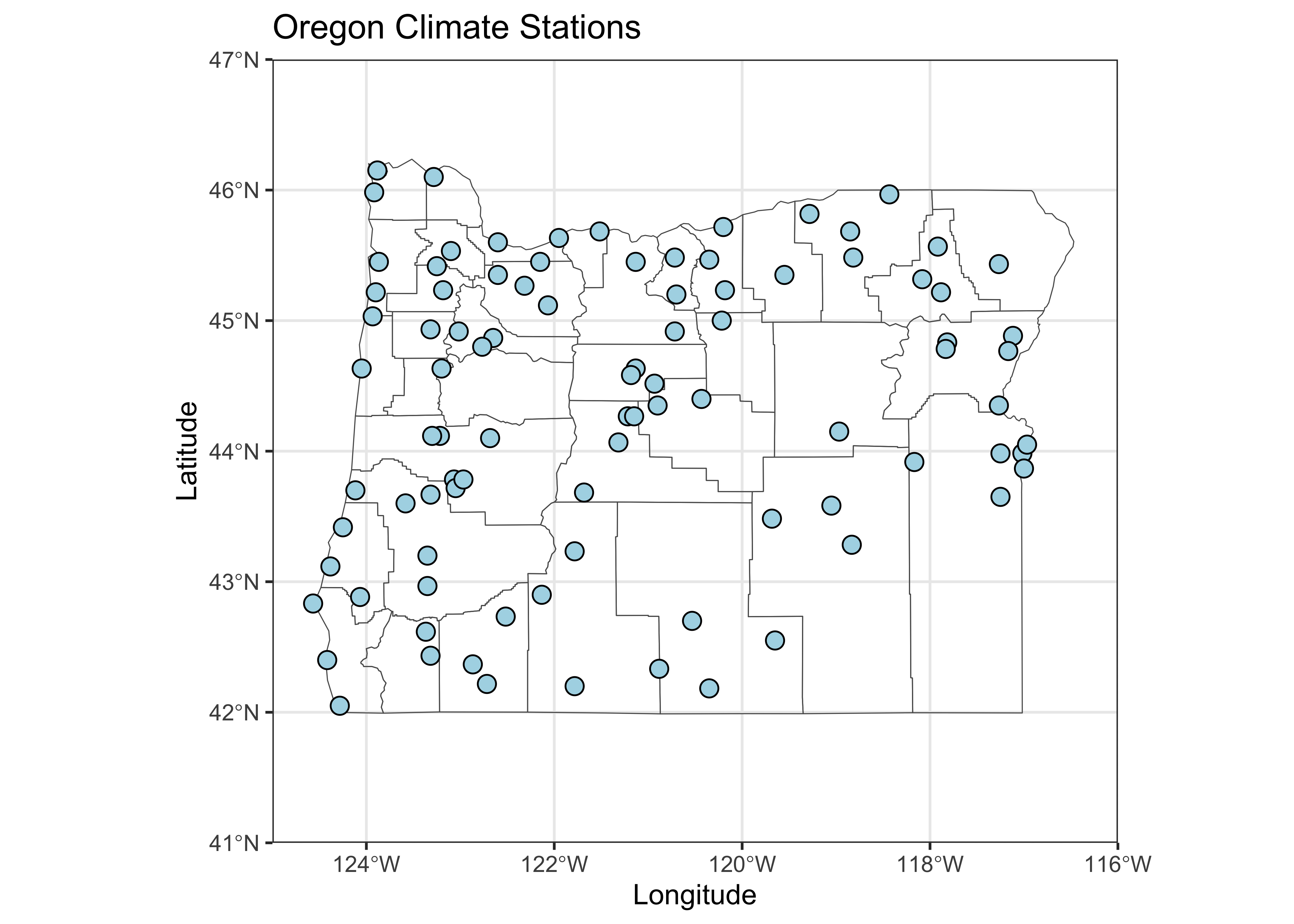

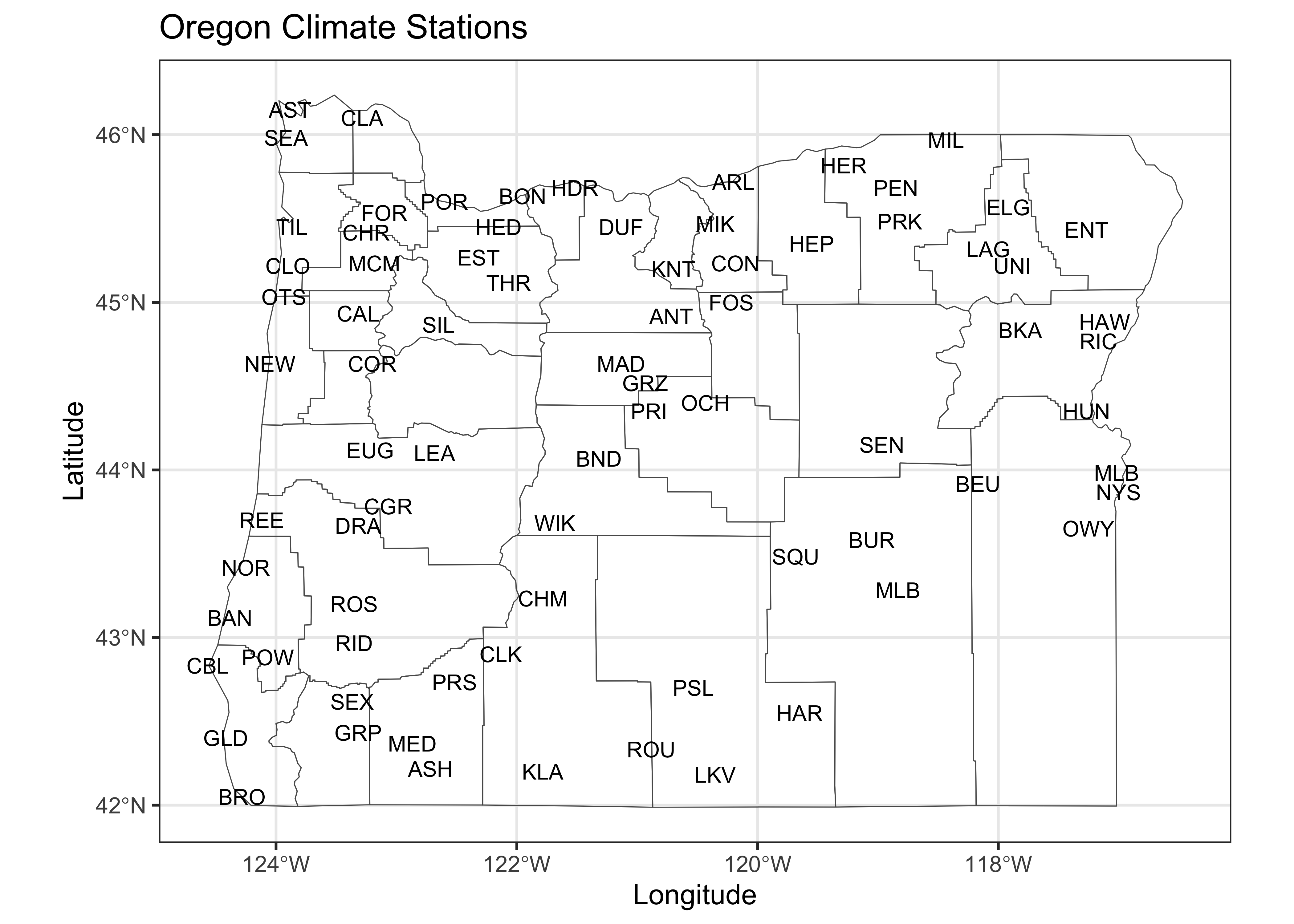

## lat, lonPlot the station locations, and then the three-letter station name

abbreviations. Notice that the use of the coord_sf()

function in the first plot to explicity specify the geographical extent

of the map, while in the second case ggplot2() determines

the extent.

# plot stations

ggplot() +

geom_sf(data = or_otl_sf, fill = NA) +

geom_point(data = orstationc, aes(x=lon, y=lat), shape=21, color="black", size = 3, fill="lightblue") +

coord_sf(xlim = c(-125, -116), ylim = c(41, 47), expand = FALSE) +

labs(title = "Oregon Climate Stations", x = "Longitude", y = "Latitude") +

theme_bw()

# plot station names

ggplot() +

geom_sf(data = or_otl_sf, fill = NA) +

geom_text(data = orstationc, aes(x =lon, y = lat, label = as.character(station)),

check_overlap = TRUE, size = 3) +

labs(title = "Oregon Climate Stations", x = "Longitude", y = "Latitude") +

theme_bw()

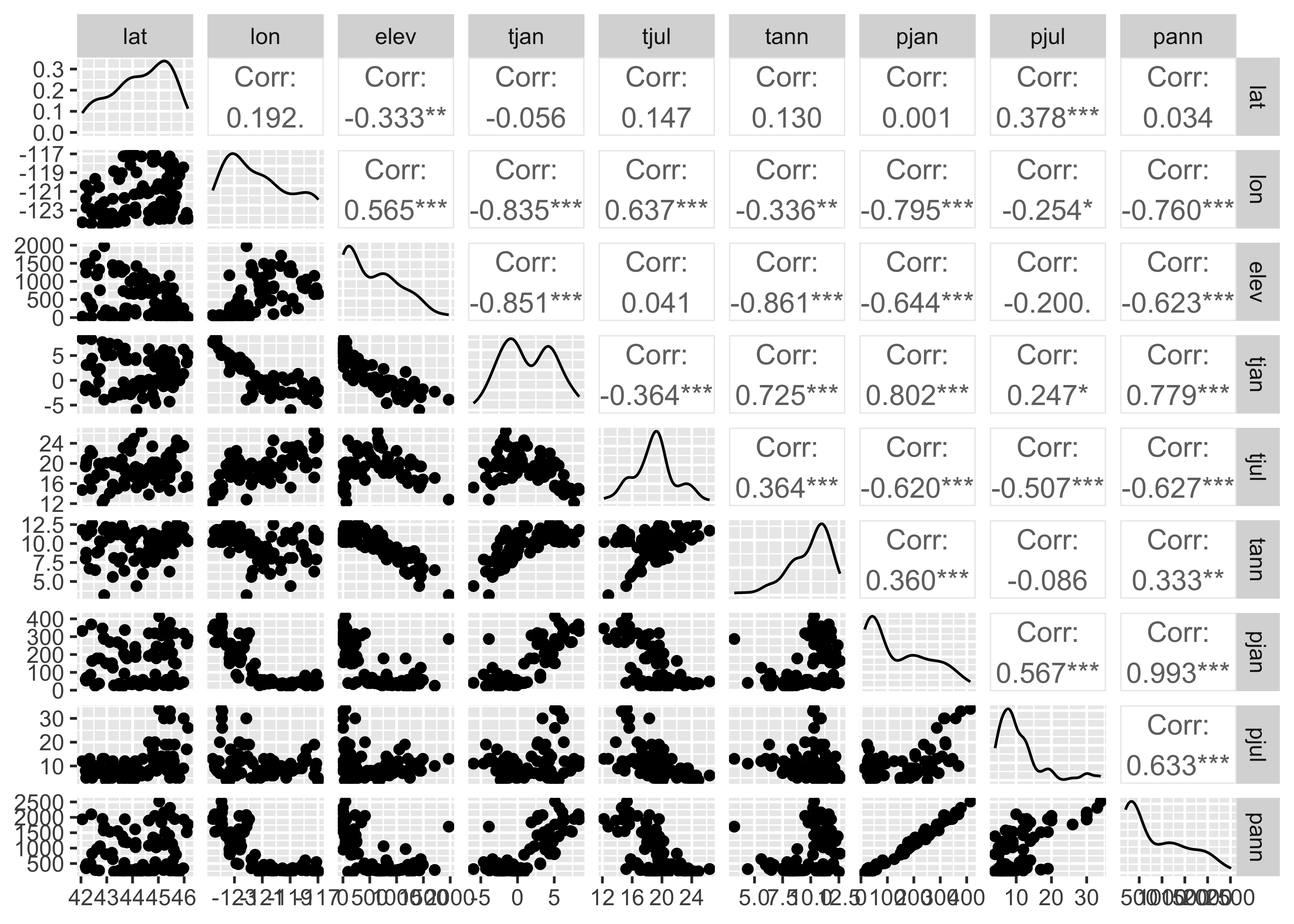

Take a quick look at the correlations among variables.

The off-diagonal cells for the climate variables indicate that there are some strong correlations among the climate variables (the asterisks indicate significance in the usual way).

2.1 Distance/Dissimilarity matrices

In this example, the groups of stations (or the climate regions of

Oregon, as expressed in the climate-station data) will be defined via an

“agglomerative-hierarchical” cluster analysis. In such an analysis,

individual objects (observations) are combined according to their

(dis)similarity, with the two most-similar objects being

combined first (to create a new composite object), then the next two

most-similar objects (including the composites), and so on until a

single object is defined. The sequence of combinations is illustrated

using a “dendrogram” (cluster tree), and this can be examined to

determine the number of clusters. The function cutree()

determines the cluster membership of each observation, which can be

listed or plotted.

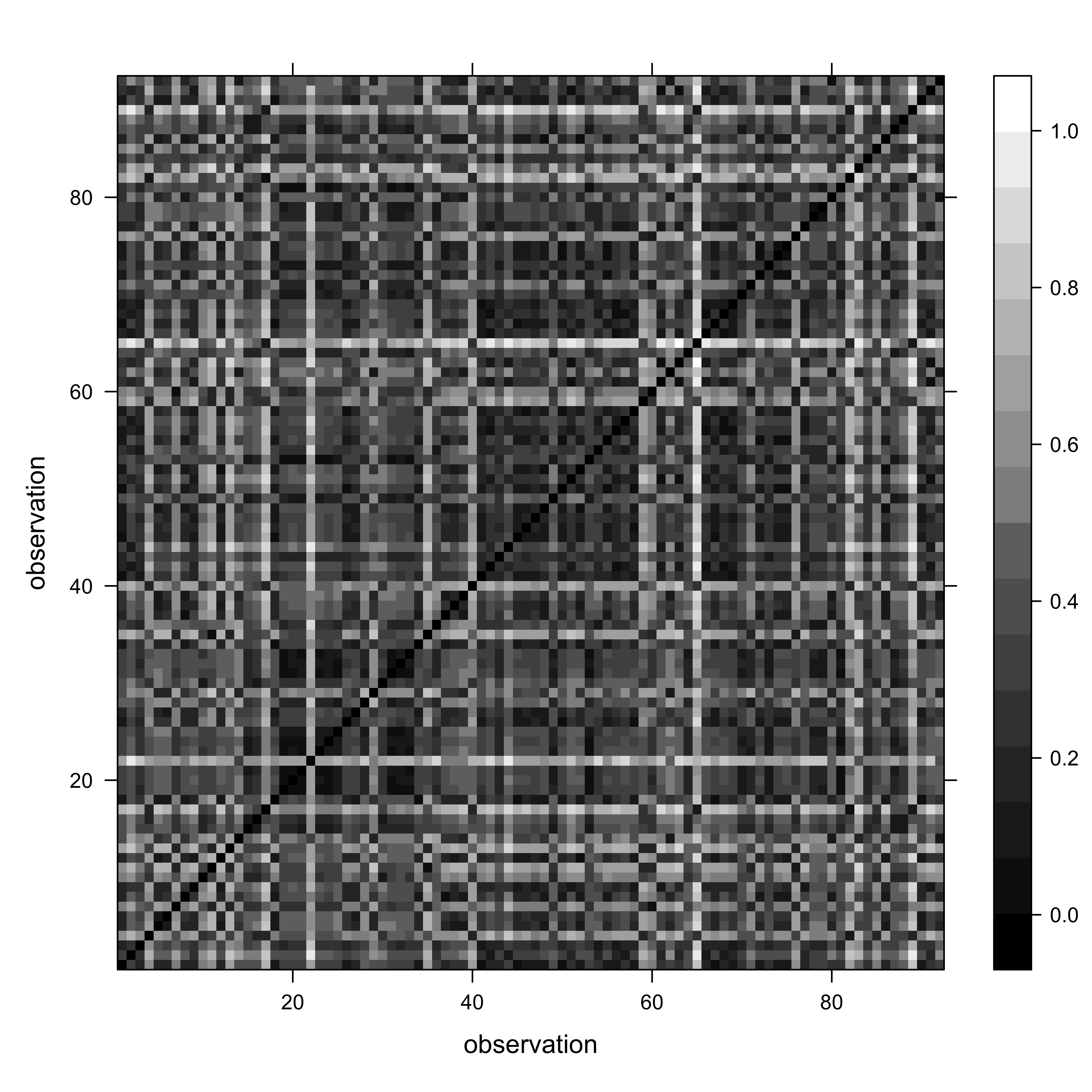

The first step in an agglomerative cluster analysis (although not

always expiclitly displayed), is the calculation of a dissimilarity

matrix, which is a square symmetrical matrix that illustrates the

dissimilarities (typically Euclidean distances) between individual cases

or observations, using in this case the six climate variables to define

the space that distance is calculated in. The levelplot()

funcction from the {lattice} package is used to make the

plot.

# get dissimilarities (distances) and cluster

X <- as.matrix(orstationc[,5:10])

X_dist <- dist(scale(X))

grid <- expand.grid(obs1 = seq(1:92), obs2 = seq(1:92))

levelplot(as.matrix(X_dist)/max(X_dist) ~ obs1*obs2, data=grid,

col.regions = gray(seq(0,1,by=0.0625)), xlab="observation", ylab="observation")

In the code above, for convenience, a matrix X was created to omit the variables we don’t want to include in the clustering (i.e. the non-climatic information). The levelplot() function plots the dissimilarity matrix that the cluster analysis works with. The dark diagonal line is formed from the Eucldean distance values of 0.0 from the comparison of each observation with itself.

There is a range of different styles of patterns that may show up

- If the climates at the individual stations were completely unrelated

to on another, or if they were identical, the plot would appear light

gray (with black pixels along the diagonal).The “plaid” as opposed to

completely random appearance of the plot indicates that it is likely

that natural groups will exist in the data.

- If stations near to one another had similar climates then one would see a plaid pattern.

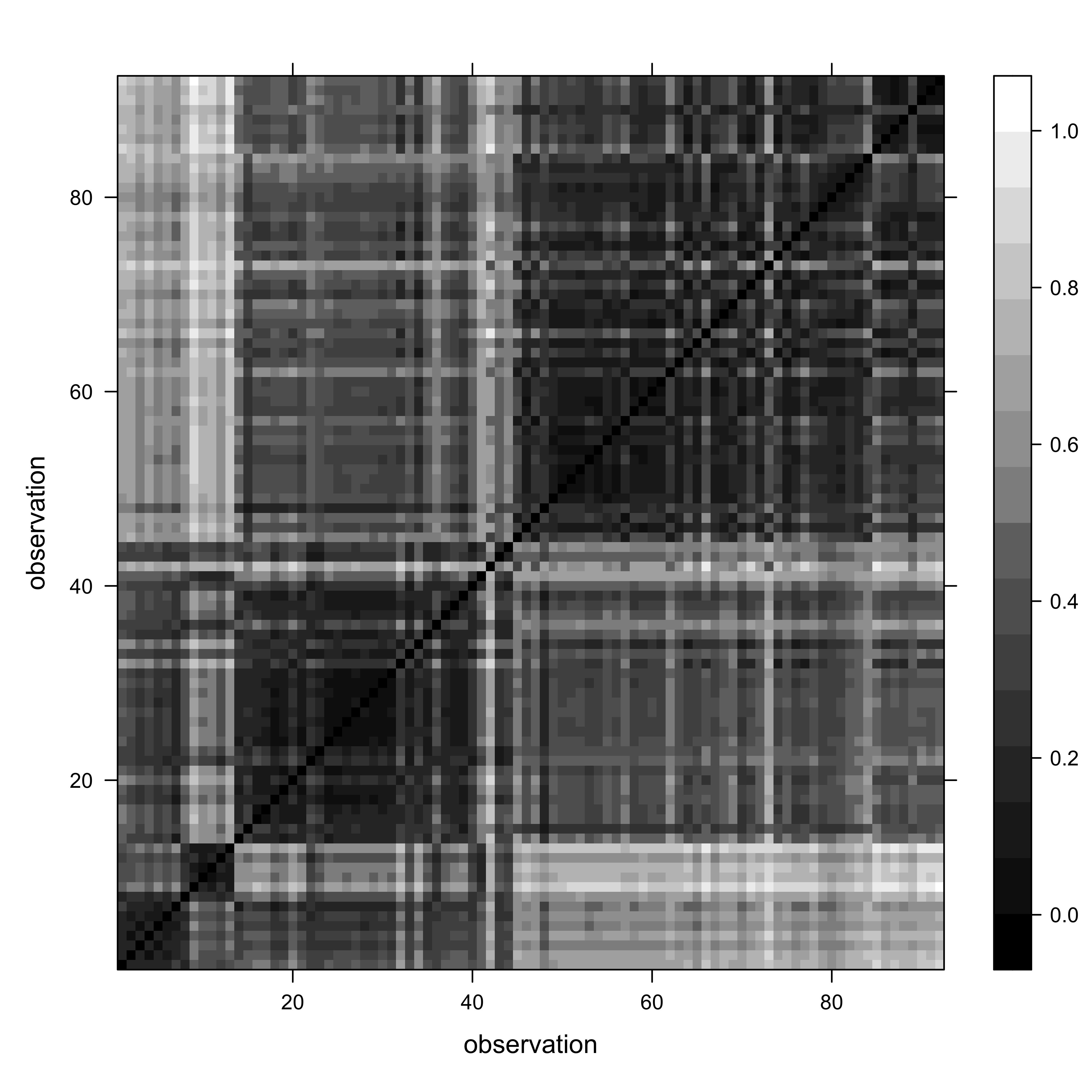

The “plaidness” of the image could be enhanced by sorting the

observations in some way that makes sense. (Right now, they’re in

alphabetical order.) The plot can be redone, this time sorting in to

longitudinal order, West to East

(i.e. orstationc[order(orstationc$lon), 5:10]):

# get dissimilarities (distances) and cluster

X <- as.matrix(orstationc[order(orstationc$lon), 5:10])

X_dist <- dist(scale(X))

grid <- expand.grid(obs1 = seq(1:92), obs2 = seq(1:92))

levelplot(as.matrix(X_dist)/max(X_dist) ~ obs1*obs2, data=grid,

col.regions = gray(seq(0,1,by=0.0625)), xlab="observation", ylab="observation")

The “plaid and blocks” pattern suggests that, as we might have expected, there is a longitudinal trend in Oregon climates.

2.2 The cluster analysis

Get the orignal distance matrix again, and do the cluster analysis:

# distance matrix

X <- as.matrix(orstationc[,5:10])

X_dist <- dist(scale(X))

# cluster analysis

X_clusters <- hclust(X_dist, method = "ward.D2")

plot(X_clusters, labels=station, cex=0.4)

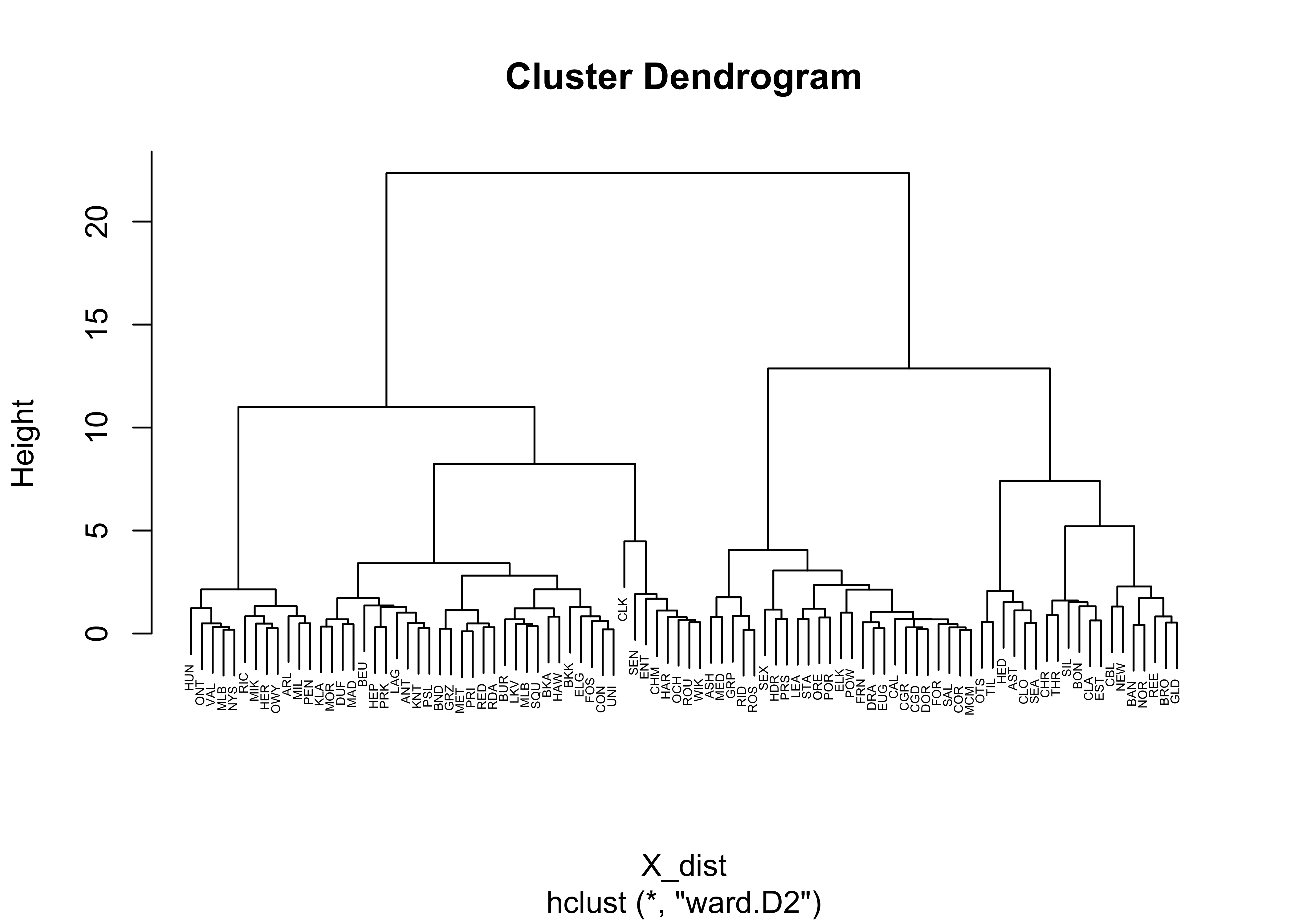

The function hclust() performs a “Wards’s

single-linkage” analysis, and the plot() function plots the

dendrogram. Notice that the individual “leaves” of the plot are labeled

by the climate-station names.

The dendrogram can be inspected to get an idea of how many distinct

clusters there are (and it can also identify unusual points–look for

Crater Lake CLK). As a first guess, let’s say there were

four distinct clusters evident in the dendrogram. The

cuttree() function can be used to “cut” the dendrogram at a

particular level:

# cut dendrogram and plot points in each cluster

nclust <- 4

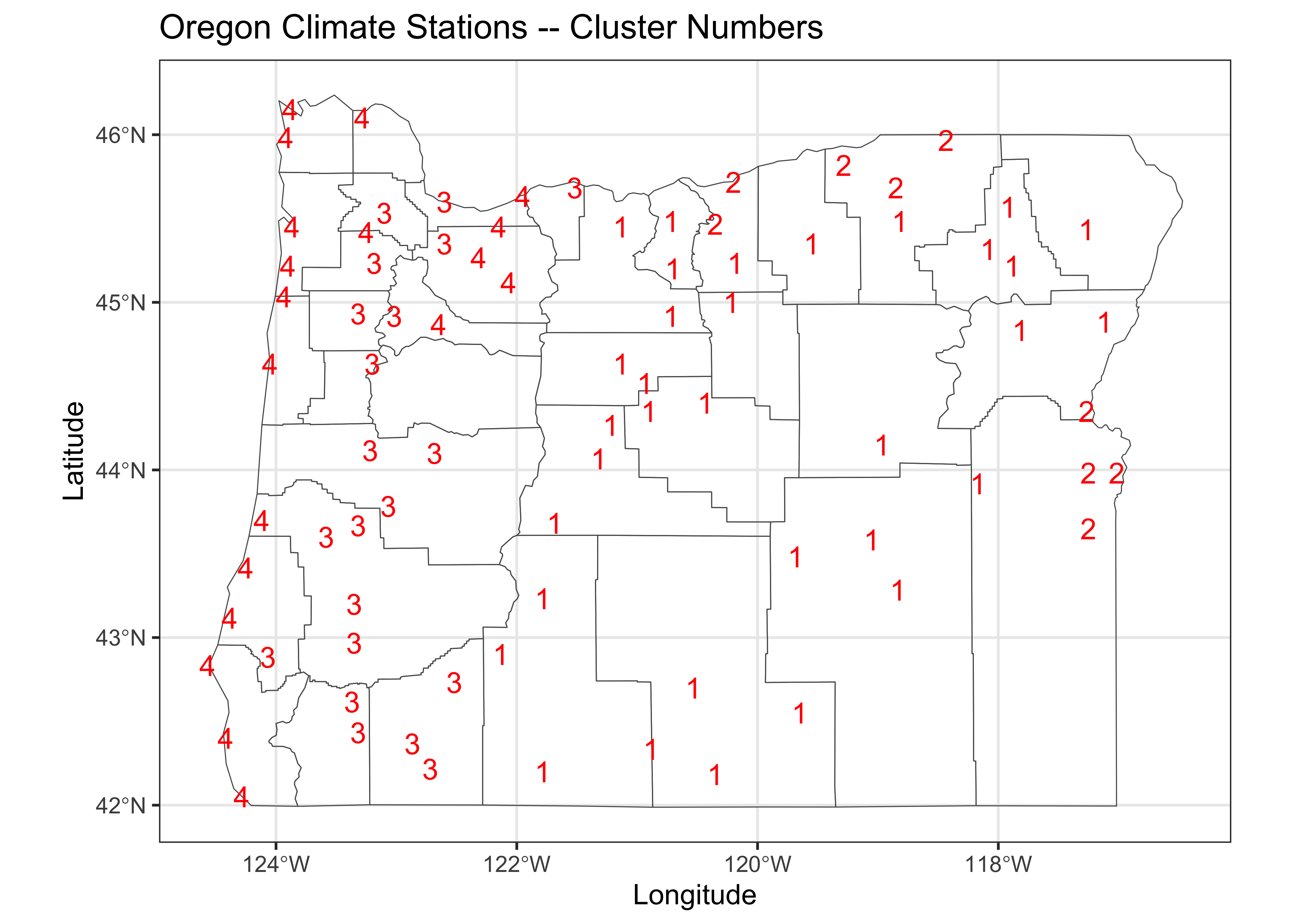

clusternum <- cutree(X_clusters, k=nclust)And then the cluster membership (i.e. which cluster?) of each station can be mapped.

# plot cluster memberships of each station

ggplot() +

geom_sf(data = or_otl_sf, fill = NA) +

geom_text(data = orstationc, aes(x =lon, y = lat, label = as.character(clusternum)),

check_overlap = TRUE, color = "red", size = 4) +

labs(title = "Oregon Climate Stations -- Cluster Numbers", x = "Longitude", y = "Latitude") +

theme_bw()

Cluster 1 picks out the stations in eastern Oregon’s “High Desert” region, while Cluster 2 highlights lower elevation stations in eastern Oregon. Cluster 3 includes stations in the valleys of western Oregon, while Cluster 4 indentifies coastal and lower Columbia Valley stations.

(A listing of the cluster number that each station belongs to can be gotten by the following code.)

2.3 Examining the cluster analysis

The efficacy of the analyis in regionalizing or grouping the stations can be gauged using plots and a analysis that looks at the “separation” of the groups of stations.

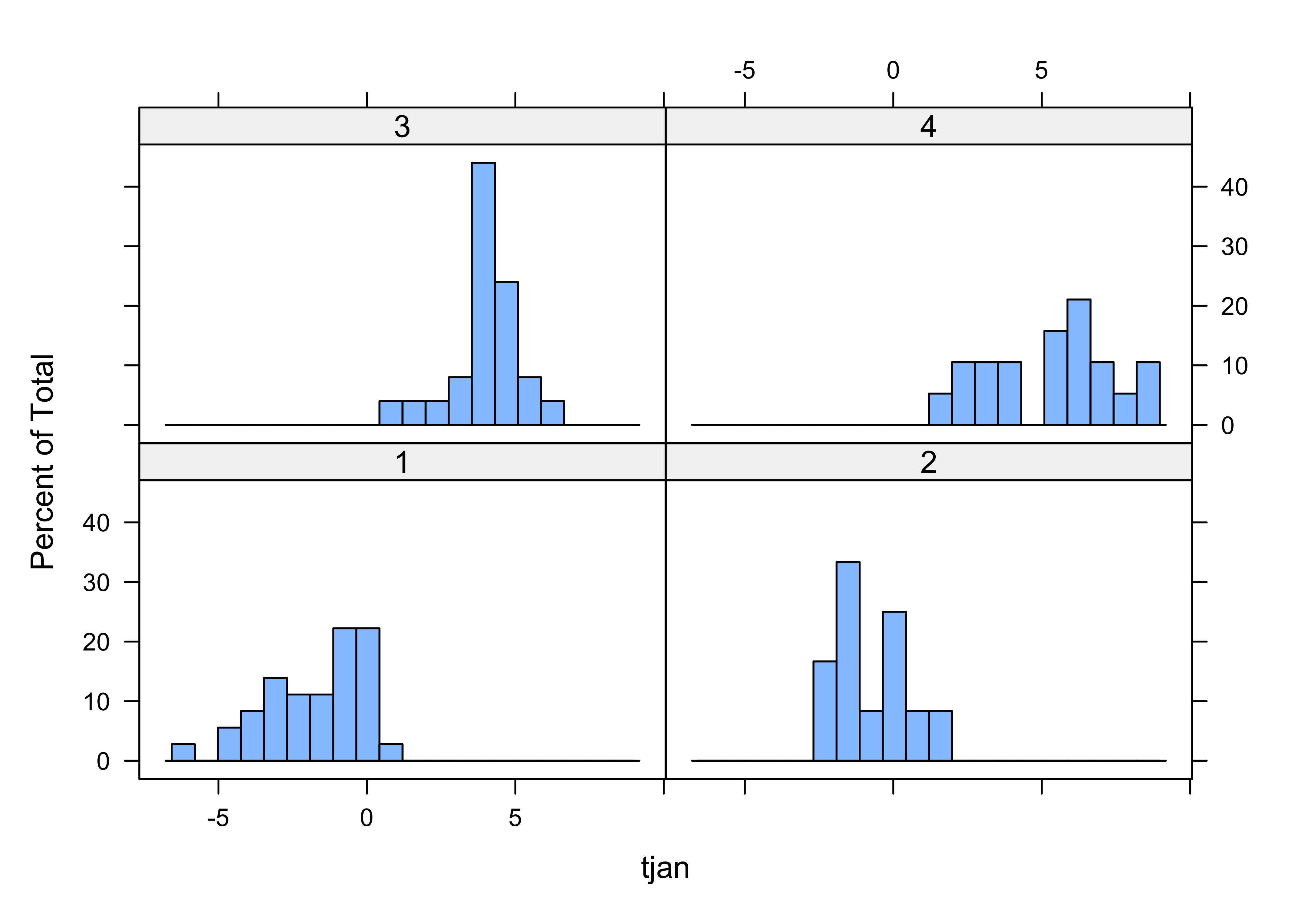

## 1 2 3 4

## -1.7166667 -0.7166667 4.0000000 5.3052632

Note how the histograms for some of the clusters seem multimodal multimodal–this is a clear sign of inhomogeneity of the clusters. Let’s see how the clusters differ. This can be done via discriminant analysis Discriminant Analysis, which (like PCA) defines some new variables with desirable properties, in this case, new variables that maximally “spread out” the groups along the new variable axes:

# discriminant analysis

cluster_lda1 <- lda(clusternum ~ tjan + tjul + tann + pjan + pjul + pann)

cluster_lda1## Call:

## lda(clusternum ~ tjan + tjul + tann + pjan + pjul + pann)

##

## Prior probabilities of groups:

## 1 2 3 4

## 0.3913043 0.1304348 0.2717391 0.2065217

##

## Group means:

## tjan tjul tann pjan pjul pann

## 1 -1.7166667 18.75556 8.038889 52.75000 9.944444 381.4722

## 2 -0.7166667 23.89167 11.225000 37.91667 6.166667 268.6667

## 3 4.0000000 19.36000 11.312000 186.64000 7.880000 1080.1600

## 4 5.3052632 15.95263 10.657895 316.52632 19.368421 1891.8947

##

## Coefficients of linear discriminants:

## LD1 LD2 LD3

## tjan -0.078899639 -0.2453913360 -1.615938671

## tjul 0.161194061 -0.7350202197 -1.079992203

## tann -0.588385785 0.2854664305 2.730086421

## pjan -0.012082646 -0.0113134200 -0.004373537

## pjul -0.015570218 0.0896595430 0.081419236

## pann -0.001246184 0.0007128788 0.001468877

##

## Proportion of trace:

## LD1 LD2 LD3

## 0.8257 0.1425 0.0318

Get the discriminant scores (the values of the new discriminant function):

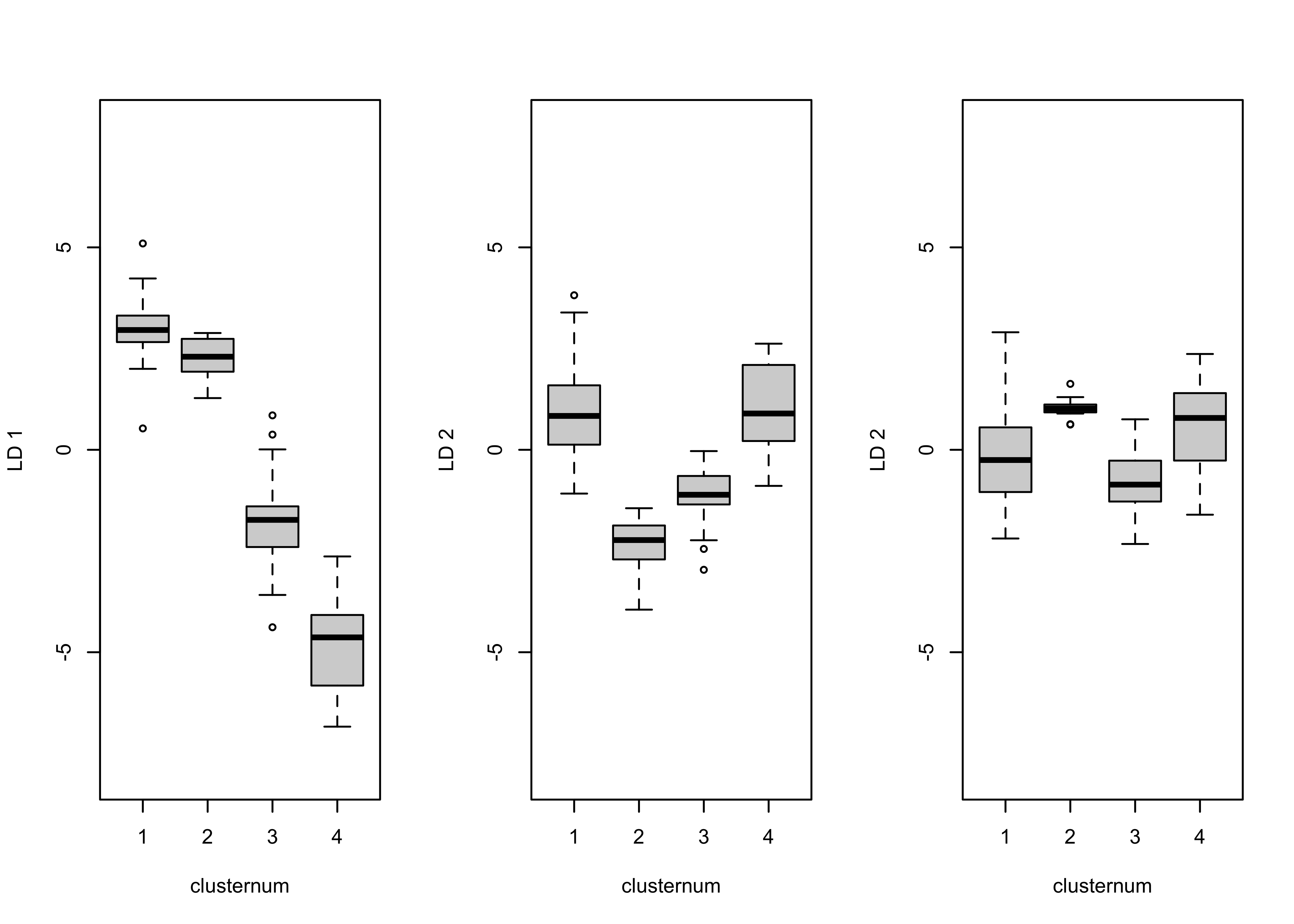

Produce a grouped box plot for each of first three discriminant function:

# residual grouped box plot

opar <- par(mfrow=c(1,3))

boxplot(cluster_dscore[, 1] ~ clusternum, ylab = "LD 1", ylim=c(-8,8))

boxplot(cluster_dscore[, 2] ~ clusternum, ylab = "LD 2", ylim=c(-8,8))

boxplot(cluster_dscore[, 3] ~ clusternum, ylab = "LD 2", ylim=c(-8,8))

The first discriminant function clearly spreads out the four clusters, while the second could be used to discriminate between clusters 1 and 4 and clusters 2 and 3.

Finally, get the discriminant function loadings, which measure the relationship or correlation between each original variables and the new discriminant function:

## LD1 LD2 LD3

## tjan -0.9196005 -0.1501351 -0.235122328

## tjul 0.4810736 -0.8198778 0.260104905

## tann -0.5795323 -0.7305824 0.063482600

## pjan -0.9632235 0.1729949 0.009078909

## pjul -0.4913887 0.5687402 0.492687963

## pann -0.9540390 0.2165052 0.059543444The loadings can be used to interpret or describe the discriminant functions. Here January temperature and January and annual precipitation are most highly correlated with the first discriminant function. Each of these have strong latitudinal gradient across the state: high in the west, low in the east. Note that this gradient corresponds well with a similar longitudinal gradient in cluster number.

The discriminant analysis here is used to answer the question “how do

the clusters of stations differ climatically?” In this case, it looks

like pann and tann are the variables most

closely correlated with each discriminant function, and because each of

these variables are more-or-less averages of the seasonal extreme

variables, that might explain why the clusters seem inhomogeneous.

The analysis can be repeated, extracting different numbers of clusters. The easiest way to do this is to copy the three chunks of code above into a text editor, changing the assignment to nclust and including or excluding before executing each chunk, one at a time.

3 Distances: among observations and among variables

As noted above, in addition to being the input to a cluster analysis,

distance matrices are interesting in their own right. In a typical

rectangular data set, there are two distance matrices that could be

calculated, one comparing variables (i.e. the columns in a data frame),

and the other comparing observations (or rows). In the examples below

the “TraCE 21ka” decadal data are used. There are (96 x 48) 4608 grid

points and 2204 observations. Read the raster brick in the netCDF file

(Trace21_TREFHT_anm2.nc):

# read the TraCE21 temperature data

# read TraCE21-ka transient climate-model simulation decadal data

tr21dec_path <- "/Users/bartlein/Projects/RESS/data/nc_files/"

tr21dec_name <- "Trace21_TREFHT_anm2.nc"

tr21dec_file <- paste(tr21dec_path, tr21dec_name, sep="")

dname <- "tas_anm_ann"

file_label <- "TraCE_decadal_"## File /Users/bartlein/Projects/RESS/data/nc_files/Trace21_TREFHT_anm2.nc (NC_FORMAT_CLASSIC):

##

## 5 variables (excluding dimension variables):

## float tas_anm_ann[lon,lat,time]

## name: tas_anm_ann

## long_name: tas anomalies

## units: C

## _FillValue: 1.00000003318135e+32

## comment: K transformed to degrees C

## float tas_anm_djf[lon,lat,time]

## name: tas_anm_djf

## long_name: tas anomalies

## units: C

## _FillValue: 1.00000003318135e+32

## comment: K transformed to degrees C

## float tas_anm_mam[lon,lat,time]

## name: tas_anm_mam

## long_name: tas anomalies

## units: C

## _FillValue: 1.00000003318135e+32

## comment: K transformed to degrees C

## float tas_anm_jja[lon,lat,time]

## name: tas_anm_jja

## long_name: tas anomalies

## units: C

## _FillValue: 1.00000003318135e+32

## comment: K transformed to degrees C

## float tas_anm_son[lon,lat,time]

## name: tas_anm_son

## long_name: tas anomalies

## units: C

## _FillValue: 1.00000003318135e+32

## comment: K transformed to degrees C

##

## 3 dimensions:

## lon Size:96

## name: lon

## long_name: Longitude

## units: degrees_east

## axis: X

## standard_name: longitude

## coodinate_defines: gridcell_center

## lat Size:48

## name: lat

## long_name: Latitude

## units: degrees_north

## axis: Y

## standard_name: latitude

## coodinate_defines: gridcell_center

## time Size:2204

## standard_name: time

## long_name: time

## units: ka BP

## axis: T

## calendar: noleap

## CoordinateAxisType: time

##

## 8 global attributes:

## title: TraCE 21 Transient Simulation

## model: CCSM3

## experiment: Trace 21

## references: Liu et al. (2009), Science 325:310-314

## history: P.J. Bartlein, 21 Aug 2014

## source_file: b30.00_4kaDVTd.cam2.ncrcat

## Conventions: CF-1.0

## comment: anomaly ltm base period: 1820, 1830, 1840lon <- ncvar_get(nc_in,"lon")

lat <- ncvar_get(nc_in,"lat")

t <- ncvar_get(nc_in, "time")

var_array <- ncvar_get(nc_in, dname)

dim(var_array)## [1] 96 48 2204The object plt_xvals is used to easily access time

labels for some of the later plots.

Reshape the 3-d array into a 2-d “tidy” matrix with individual variables (grid-point locations) in columns, and observations (time) in rows:

# reshape the 3-d array

nlon <- dim(var_array)[1]; nlat <- dim(var_array)[2]; nt <- dim(var_array)[3]

var_vec_long <- as.vector(var_array)

length(var_vec_long)## [1] 10156032## [1] 2204 46083.1 Distances among times

Notice the use of the transposition function (t()) to

get the data in the proper “tidy” arrangement for a data frame

(variables in columns, observations in rows). Distance matrix

calculation can take a while, but the {parallelDist}

package can be used to greatly speed things up, by handing off the

creation of different blocks of the matrix to different processors in a

multi-processor machine.

The parDist() function gets the dissimilarity

matrix:

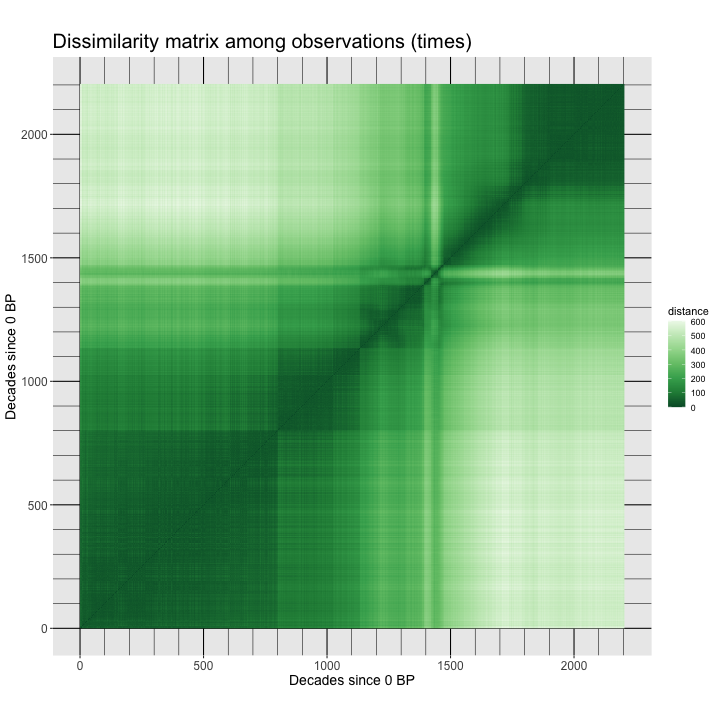

## [1] 2204 2204The dissimilarity matrix (dist_obs) can be conveniently

visualized by turning it into a {terra} SpatRaster, and

then using the {tidyterra} geom_spatraster()

function to visualize it. The rendering of the image is faster if the

image is written to a file:

## class : SpatRaster

## dimensions : 2204, 2204, 1 (nrow, ncol, nlyr)

## resolution : 1, 1 (x, y)

## extent : 0, 2204, 0, 2204 (xmin, xmax, ymin, ymax)

## coord. ref. :

## source(s) : memory

## name : lyr.1

## min value : 0.0000

## max value : 619.0497Use ggplot2() to plot the matrix (writing the *.png

image to a file):

# plot the dissimilarity matrix

png(paste(file_label, "dist_obs_v1.png", sep=""), width=720, height=720) # open the file

ggplot() +

geom_spatraster(data = t(dist_obs_terra)) +

scale_fill_distiller(type = "seq", palette = "Greens") +

scale_x_continuous(breaks = seq(0, 2200, by=500), minor_breaks = seq(0, 2200, by=100)) +

scale_y_continuous(breaks = seq(0, 2200, by=500), minor_breaks = seq(0, 2200, by=100)) +

labs(title = "Dissimilarity matrix among observations (times)",

x = "Decades since 0 BP", y = "Decades since 0 BP", fill="distance") +

theme(panel.grid.major=element_line(colour="black"), panel.grid.minor=element_line(colour="black"),

axis.text=element_text(size=12), axis.title=element_text(size=14), aspect.ratio = 1,

plot.title = element_text(size=20))

dev.off()Here’s the image:

The observations here are ordered in time, and the blocks in the distance matrix indicate that there are two distinct climate regimes: one from the beginning of the record to around 14.7 ka (14,700 years before 1950 CE, e.g. in decade 1470 before the end of the record at 0 BP). Within each major interval, there are subintervals indicated by the dark squares. For example during the Holocene (the past 11,700 years, there are two blocks, the early Holocene, 11,700 to 8200 yr BP, and the middle and late Holocene, after 8200 yr BP). There are also patterns within the blocks. During the interval from 14,700 to 11,700 yr BP, the warm, but variable, Bølling-Allerød from 14,700 yr BP to 12,900 yr BP, can be seen followed by the colder Younger Dryas interval, 12,900 to 11,700 yr BP.

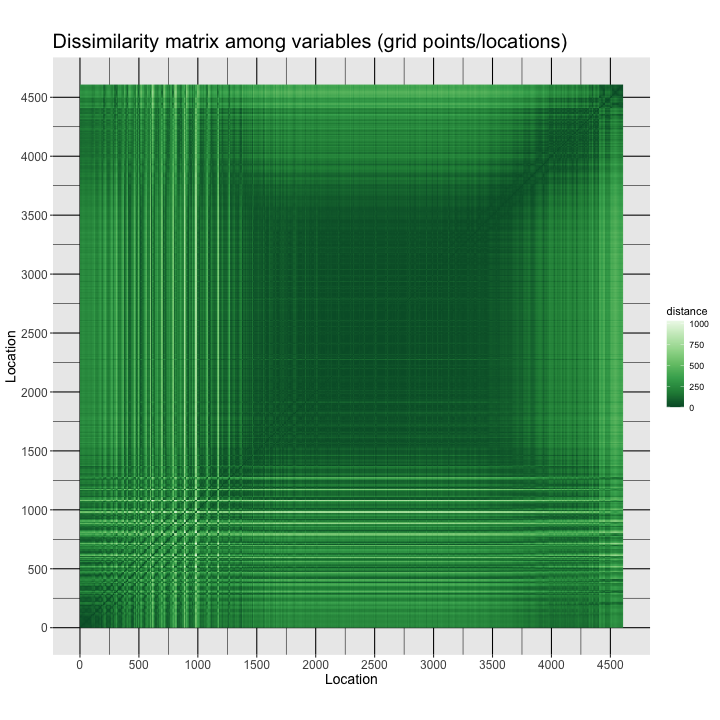

3.2 Distance among locations

The dissimilarities among locations/variables can be gotten in a

similar fashion, but note the use of the transpose function

t() to get the variables in rows, and observations in

columns:

## [1] 4608 4608## class : SpatRaster

## dimensions : 4608, 4608, 1 (nrow, ncol, nlyr)

## resolution : 1, 1 (x, y)

## extent : 0, 4608, 0, 4608 (xmin, xmax, ymin, ymax)

## coord. ref. :

## source(s) : memory

## name : lyr.1

## min value : 0.000

## max value : 1058.149Plot the matrix:

# plot the dissimilarity matrix

png(paste(file_label, "dist_var_v1.png", sep=""), width=720, height=720) # open the file

ggplot() +

geom_spatraster(data = t(dist_var_terra)) +

scale_fill_distiller(type = "seq", palette = "Greens") +

scale_x_continuous(breaks = seq(0, 4600, by=500), minor_breaks = seq(0, 4600, by=250)) +

scale_y_continuous(breaks = seq(0, 4600, by=500), minor_breaks = seq(0, 4600, by=250)) +

labs(title = "Dissimilarity matrix among variables (grid points/locations)",

x = "Location", y = "Location", fill="distance") +

theme(panel.grid.major=element_line(colour="black"), panel.grid.minor=element_line(colour="black"),

axis.text=element_text(size=12), axis.title=element_text(size=14), aspect.ratio = 1,

plot.title = element_text(size=20))

dev.off()

The image is a sort of map: The grid points are arranged in the data frame with longitudes varying rapidly, and the latitudes slowly (forming the small squares in the image), such that the square dark area (low dissimilarities) in the middle is the tropics, where the spatial variability of climate is low, while the more spatially variable higher-latitude regions (at all longitudes) frame the low-variability region. There is also a pronounced Northern Hemisphere/Southern Hemisphere contrast

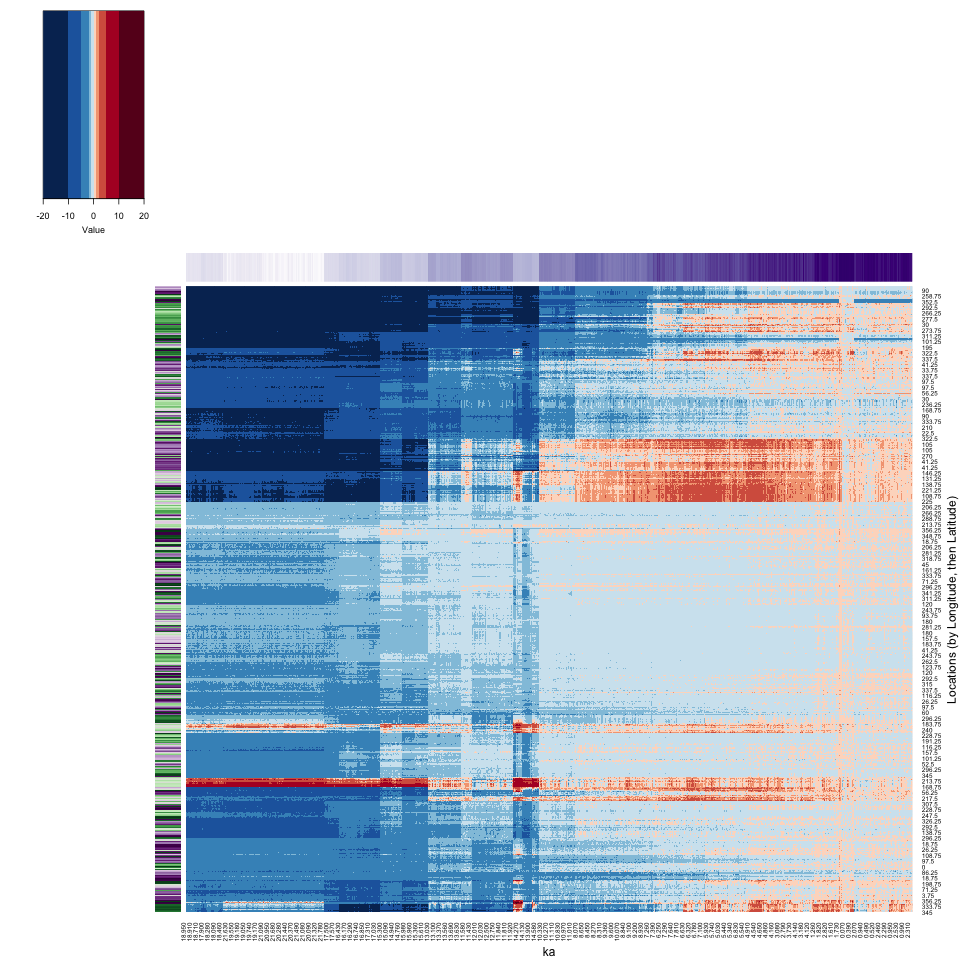

4 Heatmaps (2-way, time-and-space clustering)

“Heatmaps” are a visualization approach that is implemented in the

{base} package of R, but is extended by the

{gplots} package. A heatmap basically consists of the

raster-image type plot of the data frame, with the rows and columns of

the matrix rearranged using cluster analyses of the varables and

observations. (Note that the cluster analyses are carried out

sequentially, as opposed to simultaneously, this latter approach known

as “biclustering”.) Typically, for small data sets, the matrix plot is

augmented by dendrograms, and by “side bars” which plot some additional

attribute of the data (location or time, for example) using a scatter

plot, gradient plot, etc.

4.1 A simple example with Hovmöller-matrix data

A simple heatmap can be generated for a “Hovmöller matrix” calculated using the gridded data. The Hovmöller matrix contains longitudinal averages, and is laid out with one row for each latitude and one column for each time.

4.1.1 Set up

Create the Hovmöller matrix:

## [1] 48 2204for (n in (1:nt)) {

for (k in (1:nlat)) {

for (j in (1:nlon)) {

Y[k, n] <- Y[k, n] + var_array[j, k, n]

}

Y[k, n] <- Y[k, n]/nlon

}

}

dim(Y)## [1] 48 2204The Hovmöller matrix could also have been gotten using the

apply() function:

Y <- matrix(0.0, nrow=nlat, ncol=nt)

dim(Y)

Y <- apply(var_array, c(2,3), mean, na.rm=TRUE)

dim(Y)

ncols <- dim(Y)[2]Set row and column names for the matrix.

# set row and column names

rownames(Y) <- str_pad(sprintf("%.3f", lat), 5, pad="0")

head(rownames(Y));tail(rownames(Y)); length(rownames(Y))## [1] "-87.159" "-83.479" "-79.777" "-76.070" "-72.362" "-68.652"## [1] "68.652" "72.362" "76.070" "79.777" "83.479" "87.159"## [1] 48colnames(Y) <- str_pad(sprintf("%.3f", plt_xvals), 5, pad="0")

head(colnames(Y));tail(colnames(Y)); length(colnames(Y))## [1] "22.000" "21.990" "21.980" "21.970" "21.960" "21.950"## [1] "0.020" "0.010" "0.000" "-0.010" "-0.020" "-0.030"## [1] 2204A matrix plot of the Hovmöller matrix will illustrate the data (and is an actual Hovmöller plot). To get high (northern) latitudes at the top of the plot, and high (southern) latitudes at the bottom, flip the data:

## [1] "87.159" "83.479" "79.777" "76.070" "72.362" "68.652"## [1] "-68.652" "-72.362" "-76.070" "-79.777" "-83.479" "-87.159"## [1] 48For this example, the data are subsampled, by taking every 20th observation (to allow the dendrograms to be visualized):

## [1] 48 111## [1] "87.159" "83.479" "79.777" "76.070" "72.362" "68.652"## [1] "22.000" "21.800" "21.600" "21.400" "21.200" "21.000"More set up. Generate colors for the matrix pot and the side bars, which here will illustrate the latitude and time of each data point. The rows will be labeled by a diverging color scale, purple for the Northern Hemisphere, green for the Southern Hemisphere, while time will be labeled from past to present by increasingly saturated purples.

# generate colors for side bars

rcol <- colorRampPalette(brewer.pal(10,"PRGn"))(nlat)

ccol <- colorRampPalette(brewer.pal(9,"Purples"))(ncols)

ccol2 <- colorRampPalette(brewer.pal(9,"Purples"))(ncols2)Generate colors for the heatmap of temperature

nclr <- 10

zcol <- (rev(brewer.pal(nclr,"RdBu")))

breaks = c(-20,-10,-5,-2,-1,0,1,2,5,10,20)

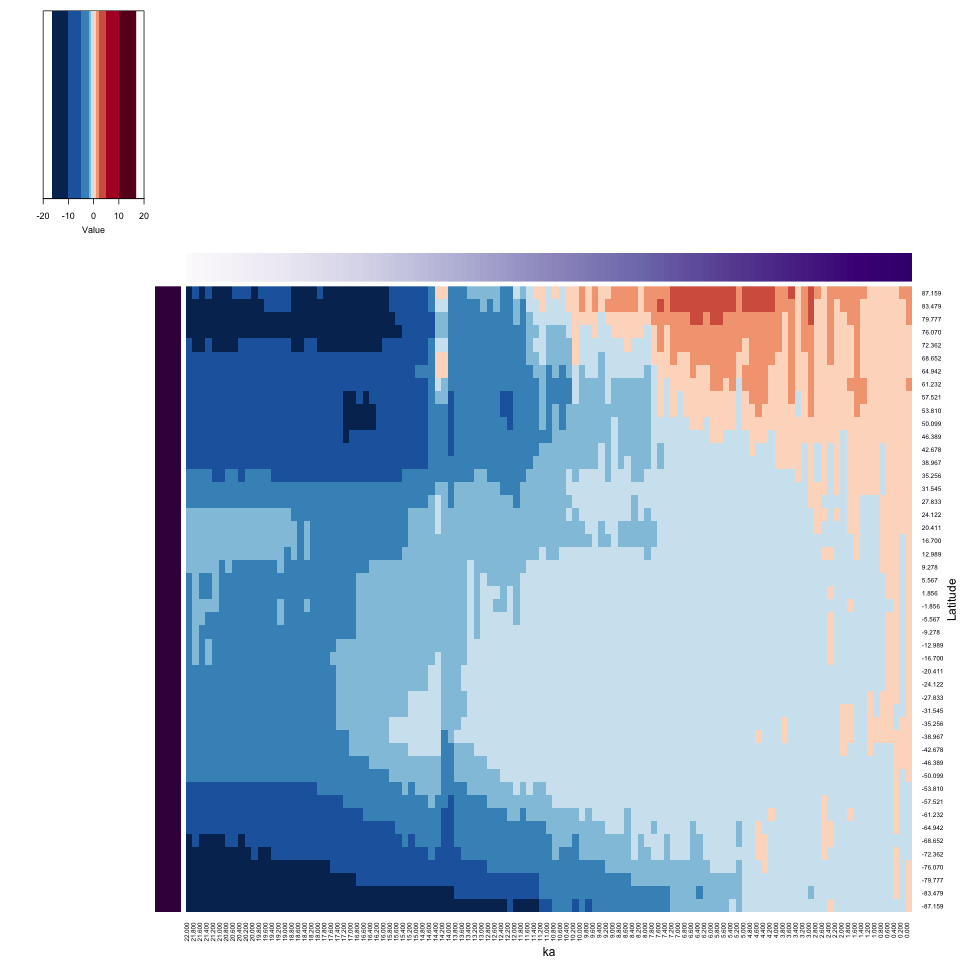

length(breaks)## [1] 114.1.2 Hovmöller-matrix plot (subsetted decadal data)

Produce an unclustered heatmap for the Hovmöller matrix. Note that

the system.time() function is being used here to gauge the

time it takes to calculate and display the various heatmaps.

# unclustered heatmap of latitude by time Hovmöller matrix

time1 <- proc.time()

png_file <- paste(file_label, "hov_01", ".png", sep="")

png(png_file, width=960, height=960)

Y2_heatmap <- heatmap.2(Y2, cexRow = 0.8, cexCol = 0.8, scale="none",

Rowv=NA, Colv=NA, dendrogram = "none", # just plot data, don't do clustering

RowSideColors=rcol, ColSideColors=ccol2, col=zcol, breaks=breaks, trace="none", density.info = "none",

lmat=rbind(c(6, 0, 5), c(0, 0, 2), c(4, 1, 3)), lwid=c(1.0, 0.2, 5.0), lhei = c(1.5, 0.2, 4.0),

xlab = "ka", ylab = "Latitude", key = TRUE, key.title = NA)

dev.off()

print (proc.time() - time1)## user system elapsed

## 0.076 0.025 0.264

Notice the way the side bars work in illustrating the location and time of each data value. The Hovmöller plot nicely shows the latitudinal variations in climate from the Last Glacial Maximum to present, with the insolation-driven warming in the Northern Hemisphere clearly expressed.

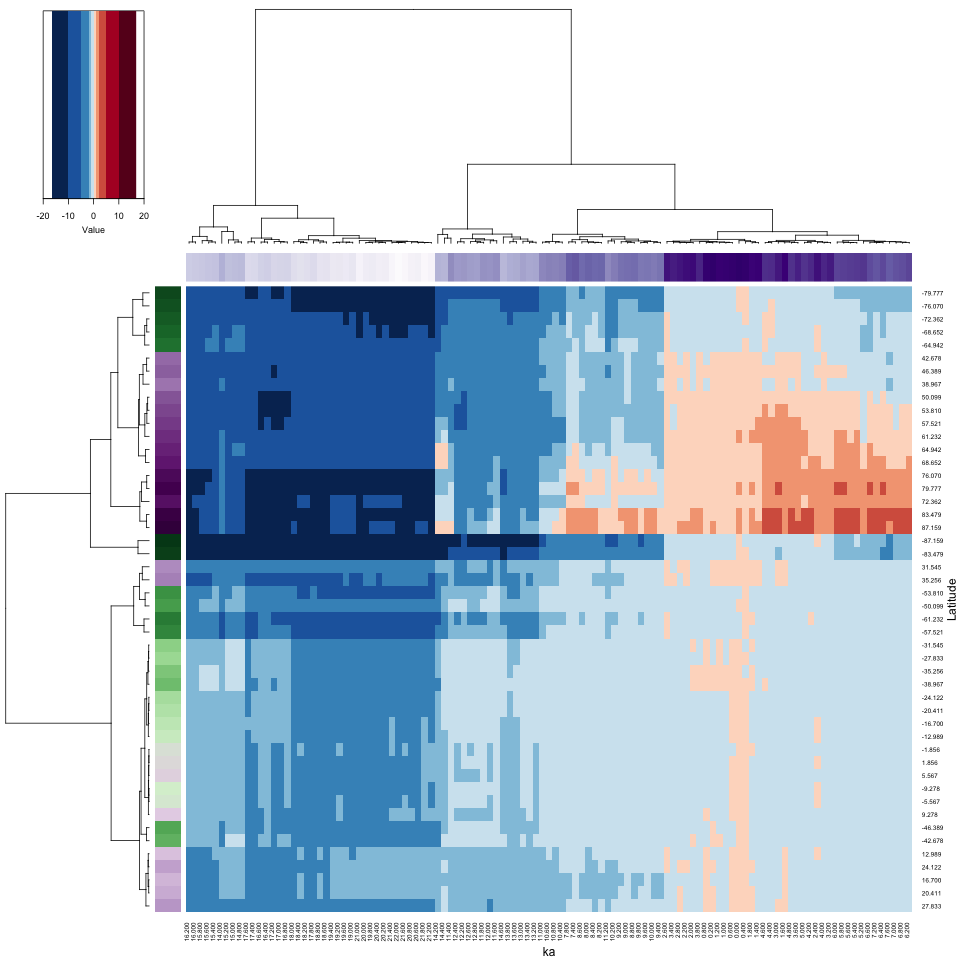

4.1.3 Hovmöller-matrix heatmap (subsetted decadal data)

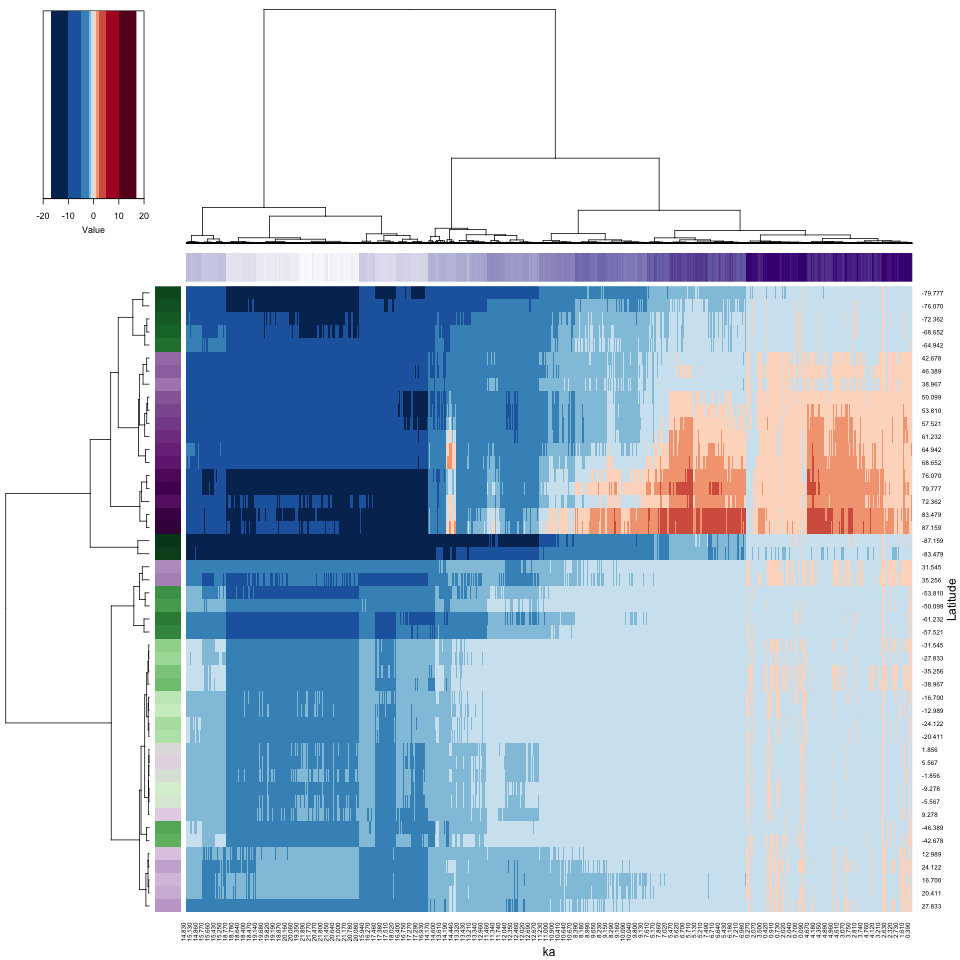

The heatmap of the Hovmöller-matrix reorganizes the rows and columns in such a way as to place variables (i.e. latitudes) with similar variations over time, and observations (times) with similar latitudinal patterns, next to one another. The row and column clustering will be done explicitly, in order to “reorder” the dendrograms, which could aid in their interpretability.

# heatmap of Hovmöller matrix

time1 <- proc.time()

png_file <- paste(file_label, "heatmap_01", ".png", sep="")

png(png_file, width=960, height=960)

# row and column clustering

cluster_row <- hclust(parDist(Y2), method = "ward.D2")

cluster_col <- hclust(parDist(t(Y2)), method = "ward.D2")

# heatmap

Y2_heatmap <- heatmap.2(Y2, cexRow = 0.8, cexCol = 0.8, scale="none",

Rowv = as.dendrogram(cluster_row),

Colv = as.dendrogram(cluster_col),

RowSideColors=rcol, ColSideColors=ccol2, col=zcol, breaks=breaks, trace="none", density.info = "none",

lmat=rbind(c(6, 0, 5), c(0, 0, 2), c(4, 1, 3)), lwid=c(1.0, 0.2, 5.0), lhei = c(1.5, 0.2, 4.0),

xlab = "ka", ylab = "Latitude", key = TRUE, key.title = NA)

dev.off()

print (proc.time() - time1)## user system elapsed

## 0.225 0.058 0.490

The heatmap has rearranged the data according to the cluster analyses of the observations and variables, placing more similar data points next to or near to one another. Notice how the colored side bars allow one to figure out the location and time of individual points. Here, and not surprisingly, there appear to be four distinct time-space clusters (or “regimes”) in the data): glacial vs. interglacial (Holocene) and high latitudes (in both hemispheres) vs. low latitudes. Notice that the rearrangement in time is relatively modest (see the column color bar), while the rearrangement in location is more dramatic. On the row color bar, high latitudes are represented by more saturated colors. Spatial variations of climate are always larger in amplitude than temporal variations.

The purple column color bar suggests that the reorganized columns are

almost in chronological order, while the colorbar for the rows shows a

distinct high-latitude/low-latitude contrast. Because dendrograms are

like a decorative mobile, and can be rotated without destroying the

cluster or branch mememberships, it’s sometimes useful to try that. This

can be done with the reorder.dendrogram() (or just

reorder()), with the cluster information and set of weights

as input arguments.

# reordered heatmap of Hovmöller matrix

time1 <- proc.time()

png_file <- paste(file_label, "heatmap_01b", ".png", sep="")

png(png_file, width=960, height=960)

# heatmap

Y2_heatmap <- heatmap.2(Y2, cexRow = 0.8, cexCol = 0.8, scale="none",

Rowv = reorder(as.dendrogram(cluster_row), 1:dim(Y2)[1]),

Colv = reorder(as.dendrogram(cluster_col), 1:dim(Y2)[2]),

RowSideColors=rcol, ColSideColors=ccol2, col=zcol, breaks=breaks, trace="none", density.info = "none",

lmat=rbind(c(6, 0, 5), c(0, 0, 2), c(4, 1, 3)), lwid=c(1.0, 0.2, 5.0), lhei = c(1.5, 0.2, 4.0),

xlab = "ka", ylab = "Latitude", key = TRUE, key.title = NA)

dev.off()

print (proc.time() - time1)

In this instance, the reordering of the dendrograms didn’t accomplish much.

4.2 A second example with decadal data

A second example uses the decadal data directly (as opposed to subsampling)

4.2.1 Hovmöller-matrix plot

# Hovmöller-matrix plot

time1 <- proc.time()

png_file <- paste(file_label, "hov_02", ".png", sep="")

png(png_file, width=960, height=960)

Y_heatmap <- heatmap.2(Y, cexRow = 0.8, cexCol = 0.8, scale="none",

Rowv=NA, Colv=NA, dendrogram = "none", # just plot data, don't do clustering

RowSideColors=rcol, ColSideColors=ccol, col=zcol, breaks = breaks, trace="none", density.info = "none",

lmat=rbind(c(6, 0, 5), c(0, 0, 2), c(4, 1, 3)), lwid=c(1.0, 0.2, 5.0), lhei = c(1.5, 0.2, 4.0),

xlab = "ka", ylab = "Latitude", key = TRUE, key.title = NA)

dev.off()

print (proc.time() - time1)## user system elapsed

## 35.070 0.091 35.195

4.2.2 Hovmöller-matrix heatmap

# Hovmöller-matrix heatmap

time1 <- proc.time()

png_file <- paste(file_label, "heatmap_02", ".png", sep="")

png(png_file, width=960, height=960)

cluster_row <- hclust(parDist(Y), method = "ward.D2")

cluster_col <- hclust(parDist(t(Y)), method = "ward.D2")

# heatmap

Y_heatmap <- heatmap.2(Y, cexRow = 0.8, cexCol = 0.8, scale="none",

Rowv = as.dendrogram(cluster_row),

Colv = as.dendrogram(cluster_col),

RowSideColors=rcol, ColSideColors=ccol, col=zcol, breaks=breaks, trace="none", density.info = "none",

lmat=rbind(c(6, 0, 5), c(0, 0, 2), c(4, 1, 3)), lwid=c(1.0, 0.2, 5.0), lhei = c(1.5, 0.2, 4.0),

xlab = "ka", ylab = "Latitude", key = TRUE, key.title = NA)

dev.off()

print (proc.time() - time1)## user system elapsed

## 1.582 0.073 1.657

The results are similar to those for the subsetted data, but the execution times are longer.

4.3 Full-grid example

Finally, a heatmap of the actual gridded data (as opposed to the Hovmöller matrix data) can be obtained. The next set of plots will display values for each of the 4608 grid points and 2204 time steps, over ten million points, over ten times the number of pixels in the output *.png files (960 x 960). We’ll trust the graphics subsystem in R along with whatever web browser is being used to view the images to get it right.

4.3.1 Set up

There are several set-up steps necessary. Reshape the input array again, and generate row and column names:

## [1] 10156032X <- matrix(var_vec_long, nrow = nlon * nlat, ncol = nt) # Don't transpose as usual this time

dim(X)## [1] 4608 2204# generate row and column names

grid <- expand.grid(lon, lat)

names(grid) <- c("lon", "lat")

# rownames(X) <- paste("E", str_pad(sprintf("%.2f", round(grid$lon, 2)), 5, pad="0"),

# "N", str_pad(sprintf("%.2f", round(grid$lat, 2)), 5, pad="0"), sep="")

rownames(X) <- round(grid$lat, 2)

head(rownames(X), 20); tail(rownames(X), 20); length(rownames(X))## [1] "-87.16" "-87.16" "-87.16" "-87.16" "-87.16" "-87.16" "-87.16" "-87.16" "-87.16" "-87.16" "-87.16"

## [12] "-87.16" "-87.16" "-87.16" "-87.16" "-87.16" "-87.16" "-87.16" "-87.16" "-87.16"## [1] "87.16" "87.16" "87.16" "87.16" "87.16" "87.16" "87.16" "87.16" "87.16" "87.16" "87.16" "87.16"

## [13] "87.16" "87.16" "87.16" "87.16" "87.16" "87.16" "87.16" "87.16"## [1] 4608colnames(X) <- str_pad(sprintf("%.3f", t), 5, pad="0")

head(colnames(X)); tail(colnames(X)); length(colnames(X))## [1] "22.000" "21.990" "21.980" "21.970" "21.960" "21.950"## [1] "0.020" "0.010" "0.000" "-0.010" "-0.020" "-0.030"## [1] 2204Flip the data…

## [1] "87.16" "87.16" "87.16" "87.16" "87.16" "87.16"## [1] "-87.16" "-87.16" "-87.16" "-87.16" "-87.16" "-87.16"## [1] 4608… and generate colors:

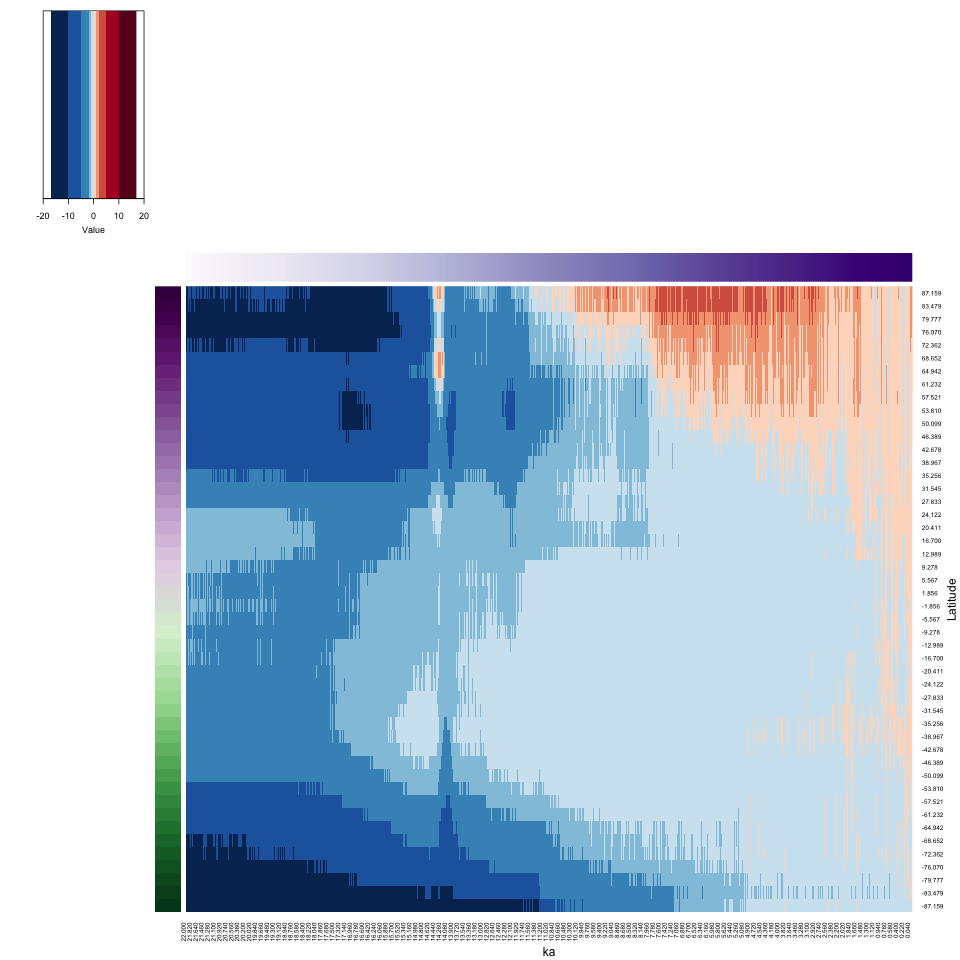

4.3.2 Matrix plot – full grid

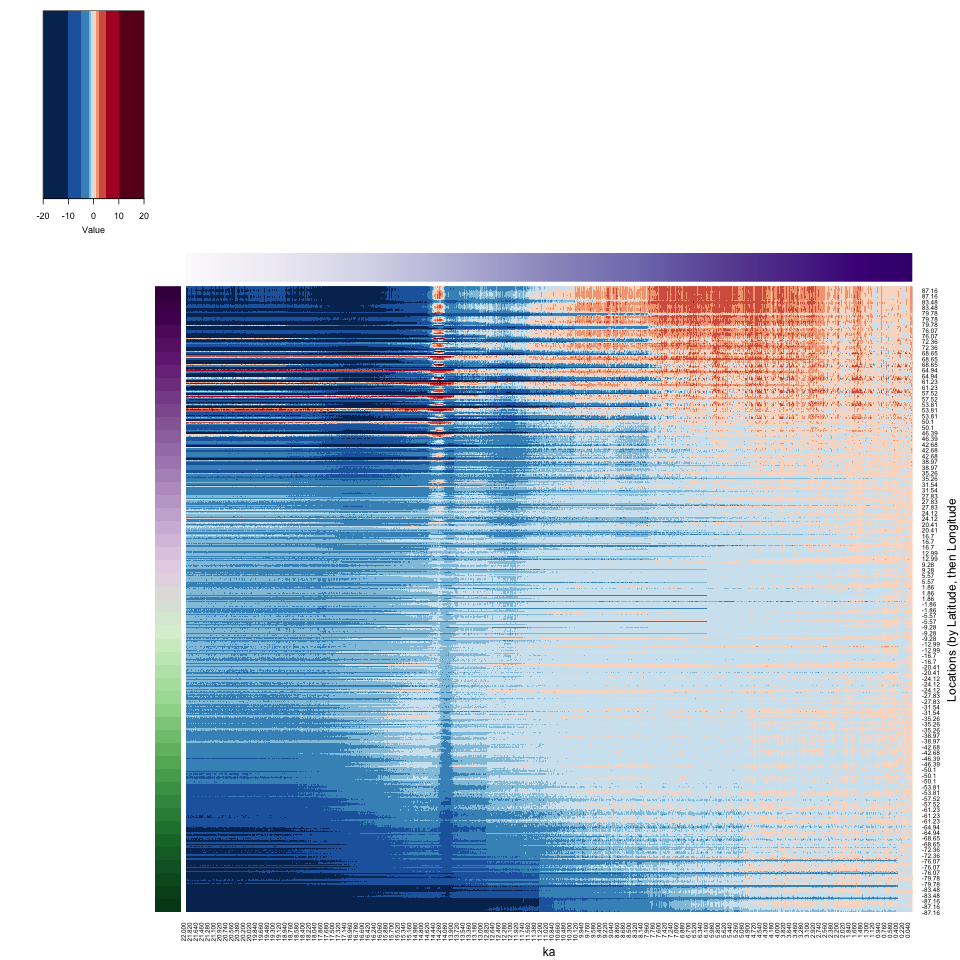

Here’s a matrix plot of the data (there are too many points to make the dendrograms interpretable in any way, and so they are suppressed).

# matrix plot of the full dataset

time1 <- proc.time()

png_file <- paste(file_label, "hov_03", ".png", sep="")

png(png_file, width=960, height=960)

X_heatmap <- heatmap.2(X, cexRow = 0.8, cexCol = 0.8, scale="none",

Rowv=NA, Colv=NA, dendrogram = "none",

RowSideColors=rcol, ColSideColors=ccol, col=zcol, breaks=breaks, trace="none", density.info = "none",

lmat=rbind(c(6, 0, 5), c(0, 0, 2), c(4, 1, 3)), lwid=c(1.0, 0.2, 5.0), lhei = c(1.5, 0.2, 4.0),

xlab = "ka", ylab = "Locations (by Latitude, then Longitude", key = TRUE, key.title = NA)

dev.off()

print (proc.time() - time1)## user system elapsed

## 802.255 6.391 814.750

The dimensions of the input netCDF file (96 longitudes x 48 latitudes x 2204 times) are such that when the 3-d arrays is reshaped, the longitudes vary most rapidly, followed by the latitudes and times. This gives rise to the horizontal banding in the image, with 48 bands organized from top to bottom. Within each band, the longitudes vary from top (west) to bottom (east). The N. Pacific/Beringia warm patch during glacial times (related to the influece of the North American Ice Sheet) is evident as the thin streaks of higher temperatures in the upper left corner of the image.

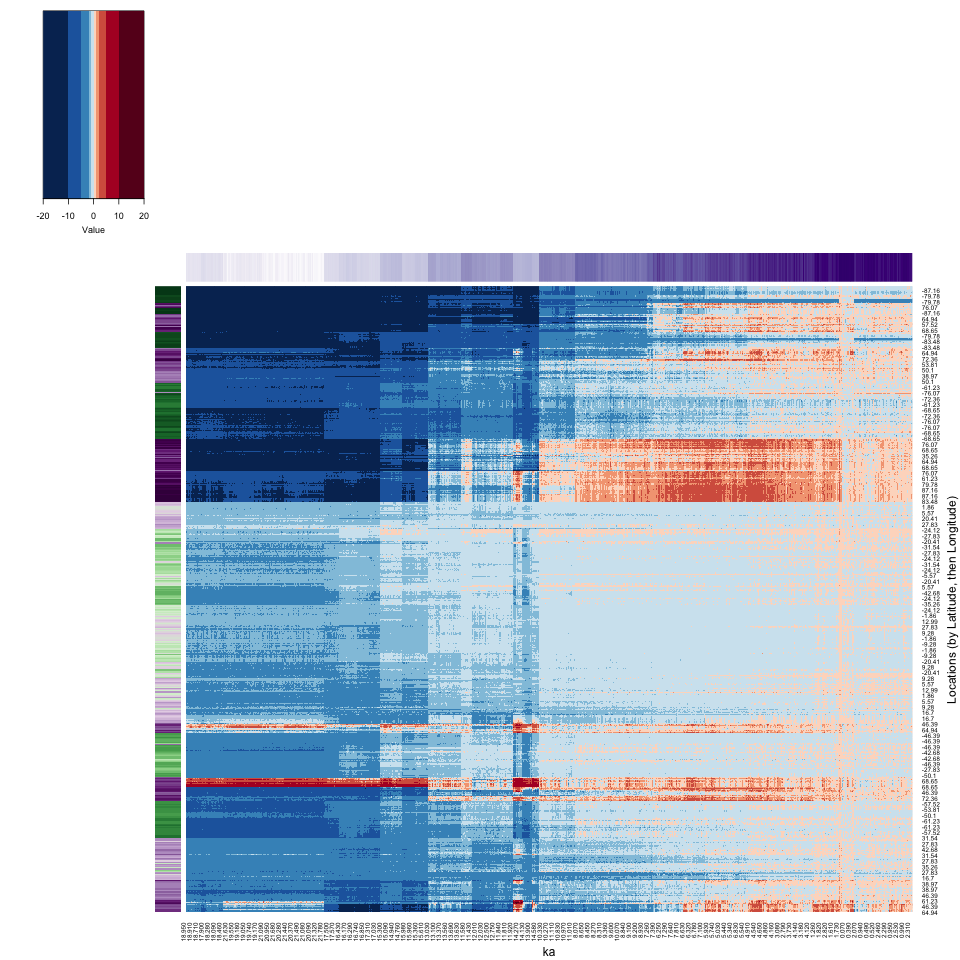

4.3.3 Heatmap (Locations (by Latitude, then Longitude))

Now here is the reorganized heatmap of the grid-point data:

# Heatmap (Locations (by Latitude, then Longitude))

time1 <- proc.time()

png_file <- paste(file_label, "heatmap_03", ".png", sep="")

png(png_file, width=960, height=960)

cluster_row <- hclust(parDist(X), method = "ward.D2")

cluster_col <- hclust(parDist(t(X)), method = "ward.D2")

# heatmap

X_heatmap <- heatmap.2(X, cexRow = 0.8, cexCol = 0.8, scale="none", dendrogram = "none",

Rowv = as.dendrogram(cluster_row),

Colv = as.dendrogram(cluster_col),

RowSideColors=rcol, ColSideColors=ccol, col=zcol, breaks=breaks, trace="none", density.info = "none",

lmat=rbind(c(6, 0, 5), c(0, 0, 2), c(4, 1, 3)), lwid=c(1.0, 0.2, 5.0), lhei = c(1.5, 0.2, 4.0),

xlab = "ka", ylab = "Locations (by Latitude, then Longitude)", key = TRUE, key.title = NA)

dev.off()

print (proc.time() - time1)## user system elapsed

## 1086.889 11.852 124.951

There is a lot going on in the full-grid heatmap.

Let’s see if reordering would contribute to the interpretability.

# reordered reorganized heat map

time1 <- proc.time()

png_file <- paste(file_label, "heatmap_03b", ".png", sep="")

png(png_file, width=960, height=960)

# heatmap

X_heatmap <- heatmap.2(X, cexRow = 0.8, cexCol = 0.8, scale="none", dendrogram = "none",

Rowv = reorder(as.dendrogram(cluster_row), 1:dim(X)[1]),

Colv = reorder(as.dendrogram(cluster_col), 1:dim(X)[2]),

RowSideColors=rcol, ColSideColors=ccol, col=zcol, breaks=breaks, trace="none", density.info = "none",

lmat=rbind(c(6, 0, 5), c(0, 0, 2), c(4, 1, 3)), lwid=c(1.0, 0.2, 5.0), lhei = c(1.5, 0.2, 4.0),

xlab = "ka", ylab = "Locations (by Latitude, then Longitude)", key = TRUE, key.title = NA)

dev.off()

print (proc.time() - time1)

The reorganization seems to contrast low-variability (top) and high-variability locations and times (bottom).

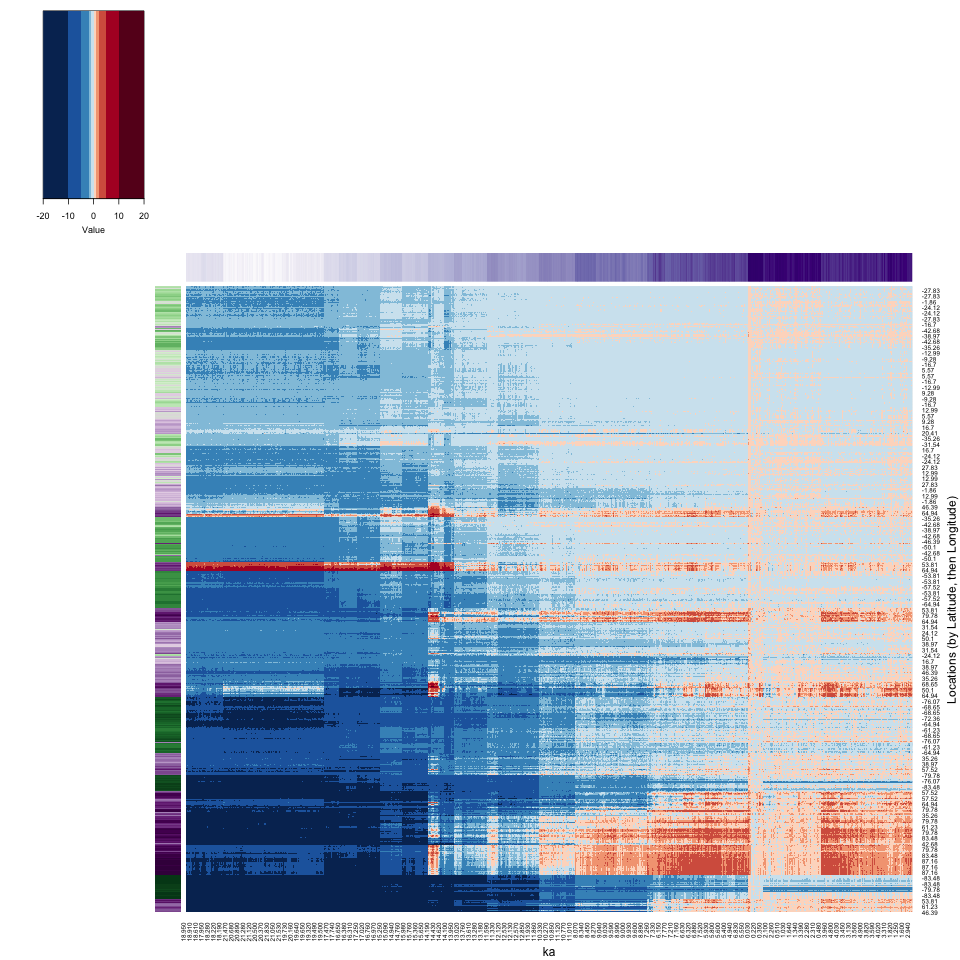

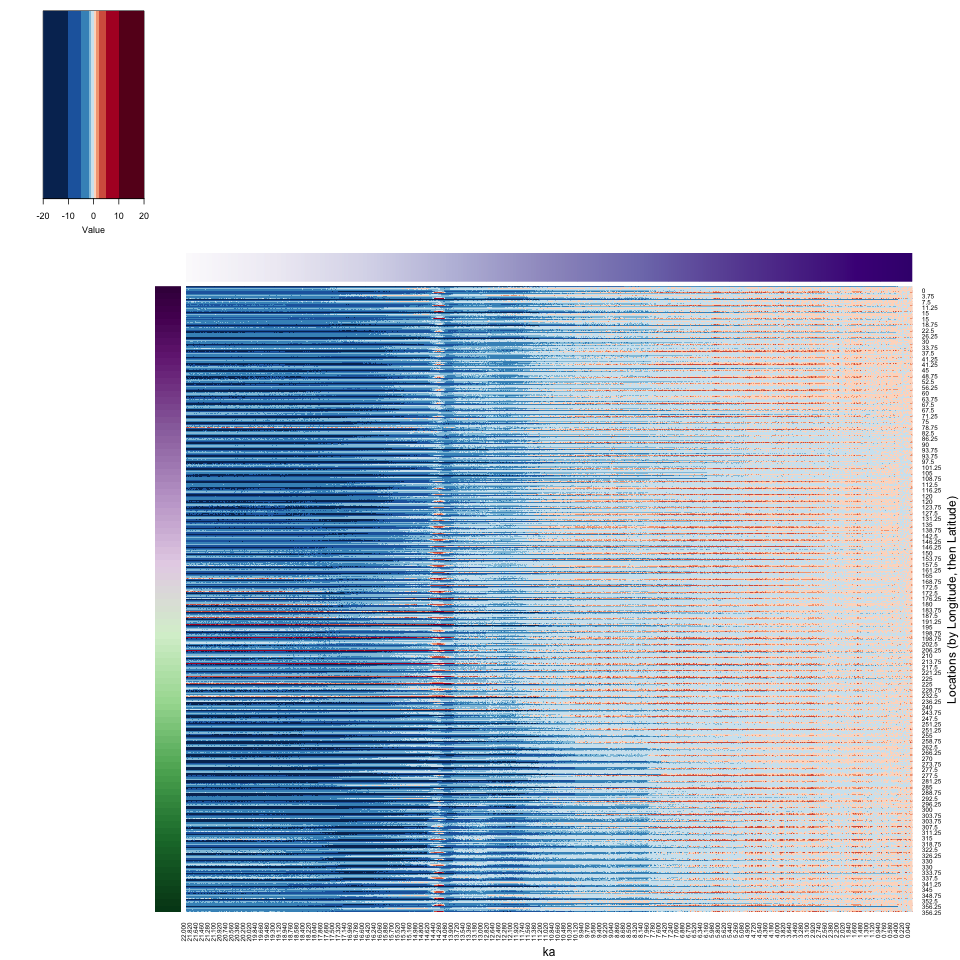

4.3.4 Heatmap (Locations (by Longitude, then Latitude))

## [1] 96 48 2204var_array2 <- array(NA, dim = c(48, 96, 2204))

for (k in (1:nlat)) {

for (j in (1:nlon)) {

var_array2[k, j, 1:nt] <- var_array[j, k, 1:nt]

}

}

dim(var_array2)## [1] 48 96 2204## [1] 10156032X2 <- matrix(var_vec_long2, nrow = nlat * nlon, ncol = nt) # Don't transpose as usual this time

dim(X2)## [1] 4608 2204# generate row and column names

grid <- expand.grid(lat, lon)

names(grid) <- c("lat", "lon")

rownames(X2) <- paste("N", str_pad(sprintf("%.2f", round(grid$lat, 2)), 5, pad="0"),

"E", str_pad(sprintf("%.2f", round(grid$lon, 2)), 5, pad="0"), sep="")

rownames(X2) <- round(grid$lon, 2)

head(rownames(X2), 20); tail(rownames(X2), 20); length(rownames(X2))## [1] "0" "0" "0" "0" "0" "0" "0" "0" "0" "0" "0" "0" "0" "0" "0" "0" "0" "0" "0" "0"## [1] "356.25" "356.25" "356.25" "356.25" "356.25" "356.25" "356.25" "356.25" "356.25" "356.25" "356.25"

## [12] "356.25" "356.25" "356.25" "356.25" "356.25" "356.25" "356.25" "356.25" "356.25"## [1] 4608colnames(X2) <- str_pad(sprintf("%.3f", t), 5, pad="0")

head(colnames(X2)); tail(colnames(X2)); length(colnames(X2))## [1] "22.000" "21.990" "21.980" "21.970" "21.960" "21.950"## [1] "0.020" "0.010" "0.000" "-0.010" "-0.020" "-0.030"## [1] 2204# generate colors for vertical and horizontal side bars

icol <- colorRampPalette(brewer.pal(10,"PRGn"))(nlon)

idx <- sort(rep((1:nlon), nlat))

rcol <- icol[idx]

ccol <- colorRampPalette(brewer.pal(9,"Purples"))(nt)time1 <- proc.time()

png_file <- paste(file_label, "hov_04", ".png", sep="")

png(png_file, width=960, height=960)

# heatmap

X2_heatmap <- heatmap.2(X2, cexRow = 0.8, cexCol = 0.8, scale="none",

Rowv=NA, Colv=NA, dendrogram = "none",

RowSideColors=rcol, ColSideColors=ccol, col=zcol, breaks=breaks, trace="none", density.info = "none",

lmat=rbind(c(6, 0, 5), c(0, 0, 2), c(4, 1, 3)), lwid=c(1.0, 0.2, 5.0), lhei = c(1.5, 0.2, 4.0),

xlab = "ka", ylab = "Locations (by Longitude, then Latitude)", key = TRUE, key.title = NA)

dev.off()

print (proc.time() - time1)

time1 <- proc.time()

png_file <- paste(file_label, "heatmap_04", ".png", sep="")

png(png_file, width=960, height=960)

cluster_row <- hclust(parDist(X2), method = "ward.D2")

cluster_col <- hclust(parDist(t(X2)), method = "ward.D2")

# heatmap

X2_heatmap <- heatmap.2(X2, cexRow = 0.8, cexCol = 0.8, scale="none", dendrogram = "none",

Rowv = as.dendrogram(cluster_row),

Colv = as.dendrogram(cluster_col),

RowSideColors=rcol, ColSideColors=ccol, col=zcol, breaks=breaks, trace="none", density.info = "none",

lmat=rbind(c(6, 0, 5), c(0, 0, 2), c(4, 1, 3)), lwid=c(1.0, 0.2, 5.0), lhei = c(1.5, 0.2, 4.0),

xlab = "ka", ylab = "Locations (by Longitude, then Latitude)", key = TRUE, key.title = NA)

dev.off()

print (proc.time() - time1)